Overview

Creating a great user experience

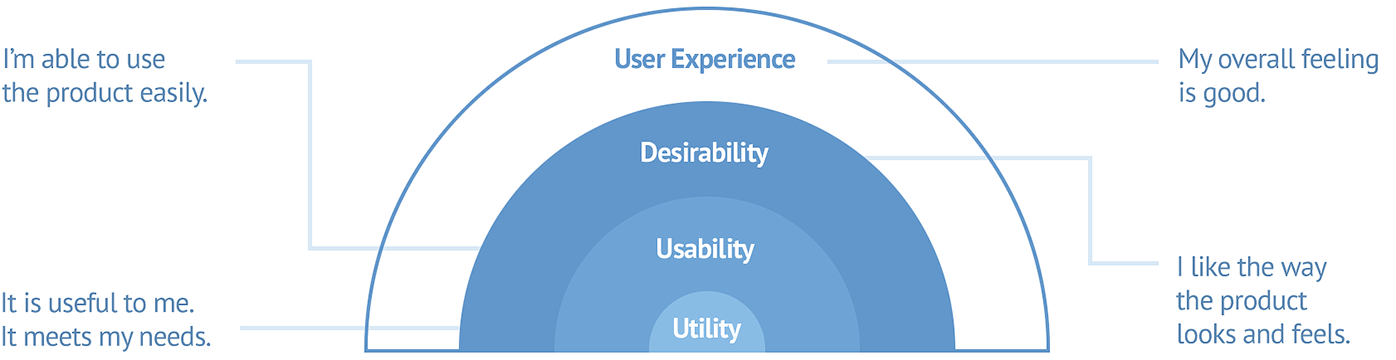

Then follows usability: the user-facing parts of the product or service (in other words, its user interface) have to be designed in a way that makes the product or service easy to understand and effortless to use.

It helps to break down usability into further integral parts:

- learnability: how easy is it for users to accomplish basic tasks the first time they encounter the product or service?

- efficiency: once users have learned the product or service, how quickly can they perform tasks?

- memorability: when users return to the product or service after a period of not using it, how easily can they reestablish proficiency?

- errors: how many errors do users make, how severe are these errors, and how easily can they recover from the errors?

This excellent framework was first suggested by NN/g, a leading voice in the user experience field whose ideas and tools our UX team has been building on for well over a decade.

This applies not only to publicly available products or services but to internally used ones as well. For intranets, usability is a matter of employee productivity. Time employees waste being lost on your intranet or pondering difficult instructions is money you waste by paying them to be at work without getting work done.

Only once we have made sure a product or service is useful and usable do we turn to the next layer: desirability, which is about ensuring that users like the way the product looks and feels.

If usability has evolved into a sort of applied science over the years, designing products and services to be desirable is still largely a form of art, even though the desirability of a product can also be tested and quantified at both the prototype and final stages.

And all these layers contribute to the resulting user experience – the overall feeling a user has about a digital product or service.

Our UX development process: approaches and tools we use

In the Discovery phase, we seek to understand and define the business environment, goals and users through online research, interviews, user analysis, competitor analysis, storyboards, brainstorming, personas…

Then, in the Wireframes and Prototypes development phases, we seek to show the expected behaviour and functionality and explain how users will interact with a website or an application.

We then summarise the findings and get back to the drawing board to improve the product before the next iteration of validation and testing.

Examples of our past UX development and usability testing engagements

A leading regional P&C insurance company tasked Metasite with developing the UX for its next-generation digital insurance self-service offering.

The Metasite UX team analysed the client’s existing customer base, identifying motivations for using online self-service, defining personas and selecting real users for testing prototypes and designs.

We then employed card sorting, iterative workflow testing, emotional response testing and other methodologies to design, test and refine the new digital self-service solution.

The Metasite UX research team carried out nine annual research studies aimed at analysing, evaluating and benchmarking the e-service offerings of every bank present in the Baltic region.

The annual Baltic E-Banking Report, at 280 pages and 9000+ data points, quickly turned into a de facto regional yardstick used by Baltic banks to benchmark themselves against competitors in the areas of e-banking functionality, clarity, convenience and online customer service responsiveness.

The 2006-2011 editions of the Baltic E-Banking Report have been made freely available for download and can be obtained here.

Arnold Dapkus

Aldas Kirvaitis